The DRY Principle is Bad Advice

The DRY principle is probably the first software design concept you learn when you start coding. It sounds very serious and convincing, after all, it has an acronym! Furthermore, the idea of not repeating ourselves deeply resonates with the reason many of us enjoy programming computers: to liberate us from mind-numbing repetitious work. It is a concept that is very easy to grasp and explain (I still have to google Liskov substitution whenever I discuss SOLID design) and applying it usually gives that wonderful buzz your brain gets when it matches a pattern. What’s not to like?

Well, preventing repetition in code is often a good idea. But sometimes, I find, it is counterproductive in ways that I’d like to discuss in this post.

DRY code can result in tight-coupling

Sharing a single piece of code between two callers often is a great idea. If you have two services that need to send transactional email, that fetches some details about the user, renders a template and send the email out, it might look something like this:

class OrderService:

# ...

def send_order_receipt(self, user_id, order_id):

user = UserService.get(user_id)

subject = f"Order {order_id} received"

body = f"Your order {order_id} has been received and will be processed shortly"

content = render('user_email.html', user=user, body=body)

email_provider.send(user.email_address, subject, content)

class PaymentService:

# ...

def send_invoice(self, user_id, order_id):

user = UserService.get(user_id)

subject = f"Payment for {order_id} received"

body = f"Payment for order {order_id} has been received, thank you!"

content = render('user_email.html', user=user, body=body)

email_provider.send(user.email_address, subject, content)

Look at all of that repeated code! It’s very tempting to DRY it up with:

def send_transaction_email(user_id, order_id, subject, body):

user = UserService.get(user_id)

content = render('user_email.html', user=user, body=body)

email_provider.send(user.email_address, subject, content)

Nice! We extracted the common code between the services to a helper function and now our services look like this:

class OrderService:

# ...

def send_order_receipt(self, user_id, order_id):

subject = f"Order {order_id} received"

body = f"Your order {order_id} has been received and will be processed shortly"

send_transaction_email(user_id, ,order_id, subject, body)

class PaymentService:

# ...

def send_invoice(self, user_id, order_id):

subject = f"Payment for {order_id} received"

body = f"Payment for order {order_id} has been received, thank you!"

send_transaction_email(user_id, ,order_id, subject, body)

Much cleaner, don’t you think?

One of the promises of DRY is that it will allow us to evolve our software better; business requirements and engineering constraints change all the time, and if we need to change the way this piece of code behaves, we only change it once and it will be reflected everywhere.

In the example above, we can pretty easily change the way we fetch user information, and even the email provider we use can be changed with ease.

But applied blindly, DRY code can do the exact opposite of facilitating change. Consider in our example, what if because of a business decision the PaymentService’s invoice mail needs to use a different template, how would we facilitate that? Or if the OrderService is now required to retrieve a list of purchased items and feed that into the email template? Our extraction of shared logic into the send_transaction_email method caused the OrderService and the PaymentService to become tightly coupled: you can’t change one without the other.

As my good friend Ohad Basan once taught me:

“When you encounter a class named Helper, the last thing it will do is help you.”

DRY code can be harder to read

Let’s take another example. Assume we are writing unit tests for a web-server we are working on, we have two tests so far:

func TestWebserver_bad_path_500(t *testing.T) {

srv := createTestWebserver()

defer srv.Close()

resp, err := http.Get(srv.URL + "/bad/path")

if err != nil {

t.Fatal("failed calling test server")

}

if resp.StatusCode != 500 {

t.Fatalf("expected response code to be 500")

}

body, err := ioutil.ReadAll(resp.Body)

if err != nil {

t.Fatal("failed reading body bytes")

}

if string(body) != "500 internal server error: failed handling /bad/path" {

t.Fatalf("body does not match expected")

}

}

func TestWebserver_unknown_path_404(t *testing.T) {

srv := createTestWebserver()

defer srv.Close()

resp, err := http.Get(srv.URL + "/unknown/path")

if err != nil {

t.Fatal("failed calling test server")

}

if resp.StatusCode != 404 {

t.Fatalf("expected response code to be 400")

}

if resp.Header.Get("X-Sensitive-Header") != "" {

t.Fatalf("expecting sensitive header not to be sent")

}

}

Plenty of duplication to refactor! Both tests do pretty much the same: they spin up a test server, make a GET call against it and then run simple assertions on the http.Response.

We can express this with a helper function that does exactly this:

func runWebserverTest(t *testing.T, request Requester, validators []Validator) {

srv := createTestWebserver()

defer srv.Close()

response := request(t, srv)

for _, validator := range validators {

validator.Validate(t, response)

}

}

I’m redacting here the exact definitions of a Requester and a Validator to save space but you can see the full implementation in this gist.

Now our tests can be refactored to be nice and DRY:

func Test_DRY_bad_path_500(t *testing.T) {

runWebserverTest(t,

getRequester("/bad/path"),

[]Validator{

getStatusCodeValidator(500),

getBodyValidator("500 internal server error: failed handling /bad/path"),

})

}

func Test_DRY_unknown_path_404(t *testing.T) {

runWebserverTest(t,

getRequester("/unknown/path"),

[]Validator{

getStatusCodeValidator(404),

getHeaderValidator("X-Sensitive-Header", ""),

})

}

A few interesting things to note about this change:

- It will be faster to write new, similar tests. If we have 15 different endpoints which behave similarly and require similar assertions, we can express them in a very concise and efficient way.

- Our code became significantly harder to read and extend. If our test fails due to some change in the future, the poor person debugging the issue will have to do a lot of clicking around until they have a good grasp of what’s going on: we replaced trivial, flat, straight-forward code, with clever abstractions and indirections.

But when should we DRY our code?

Pulling common code into libraries that can be shared between applications is a proven and effective practice that’s well established in our industry, surely we don’t mean to say we should stop doing so!

To help us decide when we should DRY code I would like to present an idea from a terrific book that was recently released in an updated 2nd edition: The Pragmatic Programmer by Andy Hunt and Dave Thomas:

“A thing is well designed if it adapts to the people who use it. For code, that means it must adapt by changing. So we believe in the ETC principle: Easier to Change. ETC. That’s it. As far as we can tell, every design principle out there is a special case of ETC. Why is decoupling good? Because by isolating concerns we make each easier to change. ETC. Why is the single responsibility principle useful? Because a change in requirements is mirrored by a change in just one module. ETC.

Source: The Pragmatic Programmer, 2nd Edition, Topic 8: The Essence of Good Design

In this gem of a chapter, Hunt and Thomas explore the idea that there is a meta-principle for evaluating design decisions that often collide - how easy is it going to be to evolve our codebase if we pick this specific path? In our discussions above we showed two ways in which DRYing code can make it harder to change, either by tight-coupling or by hampering readability - going against the meta-principle of ETC.

Being cognizant of these possible implications of DRY code can help decide when we should not DRY our code; to learn when we should do so, let’s return to the original scripture and re-examine this principle.

The DRY principle was originally introduced to the world by the same Hunt and Thomas in the 2000 edition of the book, and so they write:

“Every piece of knowledge must have a single, unambiguous, authoritative representation within a system. The alternative is to have the same thing expressed in two or more places. If you change one, you have to remember to change the others, [..]. It isn’t a question of whether you’ll remember: it’s a question of when you’ll forget.

Thomas, David. The Pragmatic Programmer, 2nd edition . Topic 9 - The Evils of Duplication

Notice that the DRY principle originally does not deal at all with the repetition or duplication of code, instead, it discusses the danger of not having a single source-of-truth representation for a piece of knowledge in the system.

When we refactored the send_transaction_email method to replace duplicated code between the OrderService and the PaymentService we confused between duplicated code and duplicated knowledge. If two procedures are identical at a certain point in time, there is no guarantee that they will continue to be required to be so in the future. We must be able to differentiate between procedures that are coincidentally shared and those that are essentially shared.

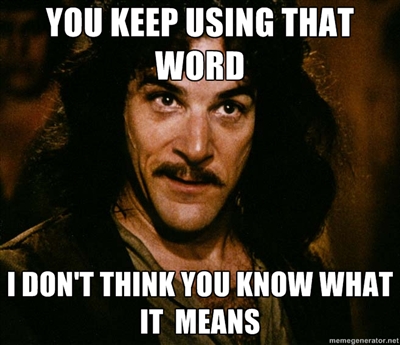

Coming full circle, I must admit, the DRY principle is a pretty important piece of advice after all; we should just remember that despite it being thrown around all the time:

Conclusion

- Removing duplication feels good, but is often wrong.

- The DRY principle is not about code duplication.

- The meta-principle of good design is: ETC.